ptmalloc

Many pwn vulnerabilities require some understanding of glibc's internal logic, so this post analyzes the source of malloc.c from ptmalloc in glibc 2.23.

Related structures, variables, and definitions

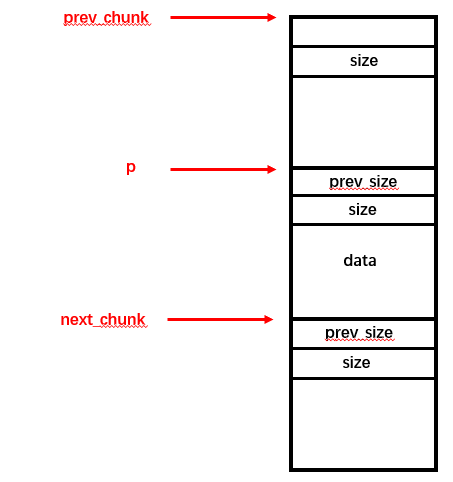

malloc_chunk

Defines the common layout shared by every chunk that malloc manages:

prev_size, size, fd, bk (fastbin chunks do not use bk)

fd_nextsize, bk_nextsize (used only by free large chunks)

/*

This struct declaration is misleading (but accurate and necessary).

It declares a "view" into memory allowing access to necessary

fields at known offsets from a given base. See explanation below.

*/

struct malloc_chunk {

INTERNAL_SIZE_T prev_size; /* Size of previous chunk (if free). */

INTERNAL_SIZE_T size; /* Size in bytes, including overhead. */

struct malloc_chunk* fd; /* double links -- used only if free. */

struct malloc_chunk* bk;

/* Only used for large blocks: pointer to next larger size. */

struct malloc_chunk* fd_nextsize; /* double links -- used only if free. */

struct malloc_chunk* bk_nextsize;

};

/*

malloc_chunk details:

(The following includes lightly edited explanations by Colin Plumb.)

Chunks of memory are maintained using a `boundary tag' method as

described in e.g., Knuth or Standish. (See the paper by Paul

Wilson ftp://ftp.cs.utexas.edu/pub/garbage/allocsrv.ps for a

survey of such techniques.) Sizes of free chunks are stored both

in the front of each chunk and at the end. This makes

consolidating fragmented chunks into bigger chunks very fast. The

size fields also hold bits representing whether chunks are free or

in use.

An allocated chunk looks like this:

chunk-> +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| Size of previous chunk, if allocated | |

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| Size of chunk, in bytes |M|P|

mem-> +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| User data starts here... .

. .

. (malloc_usable_size() bytes) .

. |

nextchunk-> +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| Size of chunk |

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

Where "chunk" is the front of the chunk for the purpose of most of

the malloc code, but "mem" is the pointer that is returned to the

user. "Nextchunk" is the beginning of the next contiguous chunk.

Chunks always begin on even word boundaries, so the mem portion

(which is returned to the user) is also on an even word boundary, and

thus at least double-word aligned.

Free chunks are stored in circular doubly-linked lists, and look like this:

chunk-> +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| Size of previous chunk |

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

`head:' | Size of chunk, in bytes |P|

mem-> +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| Forward pointer to next chunk in list |

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| Back pointer to previous chunk in list |

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| Unused space (may be 0 bytes long) .

. .

. |

nextchunk-> +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

`foot:' | Size of chunk, in bytes |

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

The P (PREV_INUSE) bit, stored in the unused low-order bit of the

chunk size (which is always a multiple of two words), is an in-use

bit for the *previous* chunk. If that bit is *clear*, then the

word before the current chunk size contains the previous chunk

size, and can be used to find the front of the previous chunk.

The very first chunk allocated always has this bit set,

preventing access to non-existent (or non-owned) memory. If

prev_inuse is set for any given chunk, then you CANNOT determine

the size of the previous chunk, and might even get a memory

addressing fault when trying to do so.

Note that the `foot' of the current chunk is actually represented

as the prev_size of the NEXT chunk. This makes it easier to

deal with alignments etc but can be very confusing when trying

to extend or adapt this code.

The two exceptions to all this are

1. The special chunk `top' doesn't bother using the

trailing size field since there is no next contiguous chunk

that would have to index off it. After initialization, `top'

is forced to always exist. If it would become less than

MINSIZE bytes long, it is replenished.

2. Chunks allocated via mmap, which have the second-lowest-order

bit M (IS_MMAPPED) set in their size fields. Because they are

allocated one-by-one, each must contain its own trailing size field.

*/
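As a small illustration of the layout described in the comment above, the sketch below models the chunk header and the flag bits stored in the low bits of size. The struct name chunk_hdr and the helper names are made up for this example; only the flag values (0x1, 0x2, 0x4) and the two-word offset between chunk and mem follow malloc.c.

```c
#include <assert.h>
#include <stddef.h>

/* Simplified stand-in for struct malloc_chunk (names are illustrative). */
struct chunk_hdr {
    size_t prev_size;            /* meaningful only if the previous chunk is free */
    size_t size;                 /* chunk size, with flag bits in the low 3 bits */
    struct chunk_hdr *fd, *bk;   /* overlap the user data while the chunk is in use */
};

#define PREV_INUSE_BIT     ((size_t)0x1)
#define IS_MMAPPED_BIT     ((size_t)0x2)
#define NON_MAIN_ARENA_BIT ((size_t)0x4)
#define FLAG_MASK (PREV_INUSE_BIT | IS_MMAPPED_BIT | NON_MAIN_ARENA_BIT)

/* Size without the flag bits, in the spirit of chunksize(). */
static size_t hdr_chunksize(const struct chunk_hdr *c)
{
    assert(c != NULL);
    return c->size & ~FLAG_MASK;
}

/* Pointer handed to the user: skips prev_size and size, like chunk2mem(). */
static void *hdr_to_mem(struct chunk_hdr *c)
{
    return (char *)c + 2 * sizeof(size_t);
}

/* Inverse of hdr_to_mem(), like mem2chunk(). */
static struct chunk_hdr *mem_to_hdr(void *mem)
{
    return (struct chunk_hdr *)((char *)mem - 2 * sizeof(size_t));
}
```

A size field of 0x91 therefore means a 0x90-byte chunk whose previous chunk is in use.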

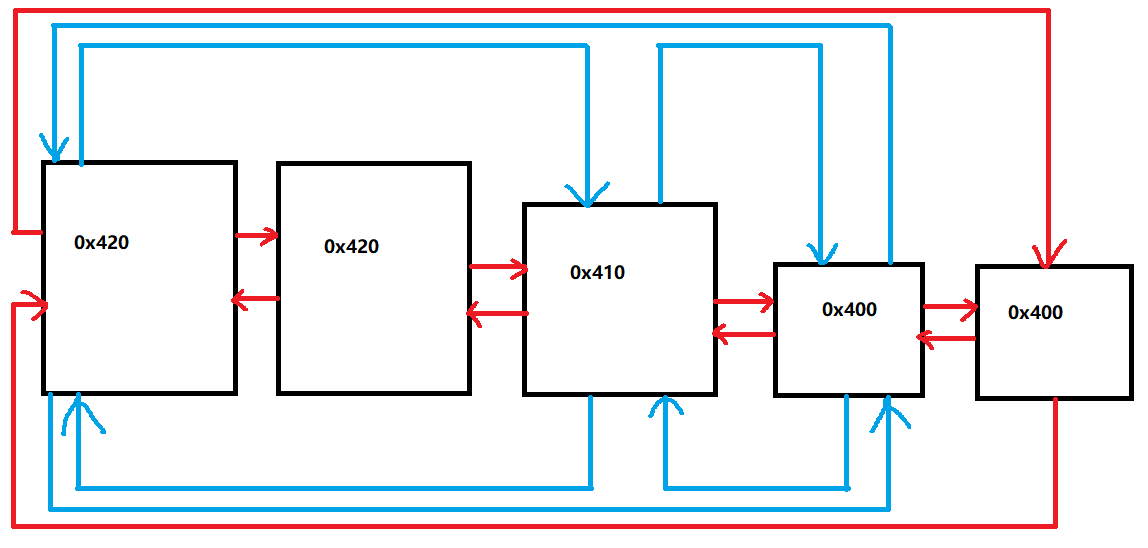

bins

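- Chunks are inserted into and removed from bins in FIFO order.

- Per the Indexing comment in the source (which describes the 32-bit layout), bins for sizes below 512 bytes are spaced 0x8 apart; bins above that are largebins, whose spacing is not necessarily 0x8. On 64-bit the small bins are spaced 0x10 apart and cover sizes below 0x400. See the definitions for details.

- A fastbin is a singly linked list.

- A smallbin is a circular doubly linked list.

- Largebins are managed differently. A single largebin can hold chunks of several sizes within a range. On top of the smallbin structure (the red pointers in the figure), a largebin adds fd_nextsize and bk_nextsize, which point to the head chunk of the next smaller / next larger size respectively (the blue pointers in the figure).

- Sufficiently large requests are served by mmap and are therefore not managed by bins. The default mmap threshold is 128 KiB and can be adjusted dynamically; the source comment notes that the bins top out around 1MB for this reason.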

/*

Bins

An array of bin headers for free chunks. Each bin is doubly

linked. The bins are approximately proportionally (log) spaced.

There are a lot of these bins (128). This may look excessive, but

works very well in practice. Most bins hold sizes that are

unusual as malloc request sizes, but are more usual for fragments

and consolidated sets of chunks, which is what these bins hold, so

they can be found quickly. All procedures maintain the invariant

that no consolidated chunk physically borders another one, so each

chunk in a list is known to be preceeded and followed by either

inuse chunks or the ends of memory.

Chunks in bins are kept in size order, with ties going to the

approximately least recently used chunk. Ordering isn't needed

for the small bins, which all contain the same-sized chunks, but

facilitates best-fit allocation for larger chunks. These lists

are just sequential. Keeping them in order almost never requires

enough traversal to warrant using fancier ordered data

structures.

Chunks of the same size are linked with the most

recently freed at the front, and allocations are taken from the

back. This results in LRU (FIFO) allocation order, which tends

to give each chunk an equal opportunity to be consolidated with

adjacent freed chunks, resulting in larger free chunks and less

fragmentation.

To simplify use in double-linked lists, each bin header acts

as a malloc_chunk. This avoids special-casing for headers.

But to conserve space and improve locality, we allocate

only the fd/bk pointers of bins, and then use repositioning tricks

to treat these as the fields of a malloc_chunk*.

*/

// --------------------------------------------

/*

Indexing

Bins for sizes < 512 bytes contain chunks of all the same size, spaced

8 bytes apart. Larger bins are approximately logarithmically spaced:

64 bins of size 8

32 bins of size 64

16 bins of size 512

8 bins of size 4096

4 bins of size 32768

2 bins of size 262144

1 bin of size what's left

There is actually a little bit of slop in the numbers in bin_index

for the sake of speed. This makes no difference elsewhere.

The bins top out around 1MB because we expect to service large

requests via mmap.

Bin 0 does not exist. Bin 1 is the unordered list; if that would be

a valid chunk size the small bins are bumped up one.

*/

MAX_FAST_SIZE

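MAX_FAST_SIZE is the largest request size that fastbins can be configured to serve. The boundary actually used at run time is the global_max_fast variable (read through get_max_fast()): any chunk whose size does not exceed it is treated as a fastbin chunk.

If an attacker manages to overwrite that variable so that much larger chunks are also treated as fastbin chunks, the result can be an arbitrary-address write.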
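To put numbers on these limits, the sketch below evaluates the MAX_FAST_SIZE formula and, as an assumption taken from the same malloc.c, the DEFAULT_MXFAST formula (64 * SIZE_SZ / 4) for a given SIZE_SZ. The function names are invented for the example.

```c
#include <assert.h>
#include <stddef.h>

/* Upper bound for the fastbin limit: 80 * SIZE_SZ / 4. */
static size_t max_fast_size(size_t size_sz)
{
    assert(size_sz == 4 || size_sz == 8);
    return 80 * size_sz / 4;
}

/* Default fastbin limit: 64 * SIZE_SZ / 4 (DEFAULT_MXFAST in malloc.c). */
static size_t default_mxfast(size_t size_sz)
{
    assert(size_sz == 4 || size_sz == 8);
    return 64 * size_sz / 4;
}
```

On 64-bit this gives 160 (0xa0) for the upper bound and 128 (0x80) for the default, so any value of global_max_fast far above that is a sign of corruption.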

/* The maximum fastbin request size we support */

#define MAX_FAST_SIZE (80 * SIZE_SZ / 4)

Related macros and functions

fastbin_index

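The macro that computes a fastbin index.

The macro shows that on 64-bit the low 4 bits of size are ignored (on 32-bit, the low 3 bits). In other words, all sizes that differ only in those bits map to the same fastbin.

e.g.

(0x21>>4)-2 == 0

(0x28>>4)-2 == 0

Chunks with size fields 0x21 and 0x28 are treated as belonging to the same fastbin.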

/* offset 2 to use otherwise unindexable first 2 bins */

#define fastbin_index(sz) \

((((unsigned int) (sz)) >> (SIZE_SZ == 8 ? 4 : 3)) - 2)
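The macro can be tried out in isolation. The sketch below is the same arithmetic specialised for a 64-bit build (SIZE_SZ == 8); the function name fastbin_index64 is made up for this example.

```c
#include <assert.h>
#include <stddef.h>

/* fastbin_index() with the shift fixed at 4, i.e. the SIZE_SZ == 8 case. */
static unsigned fastbin_index64(size_t chunk_size)
{
    assert(chunk_size >= 0x20);   /* smallest chunk on 64-bit is 0x20 bytes */
    return ((unsigned)chunk_size >> 4) - 2;
}
```

Every size from 0x20 to 0x2f maps to index 0 and 0x30 is the first size that maps to index 1, which is why a forged size field only has to be right in its upper bits to pass the fastbin index check.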

smallbin_index

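The macro that computes a smallbin index.

The low bits are ignored here as well: 4 bits when SMALLBIN_WIDTH == 16 (64-bit), otherwise 3 bits.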

#define smallbin_index(sz) \

((SMALLBIN_WIDTH == 16 ? (((unsigned) (sz)) >> 4) : (((unsigned) (sz)) >> 3))\

+ SMALLBIN_CORRECTION)

unlink

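Removes a chunk (p) from a bin list, performing the following checks:

- p->fd->bk == p && p->bk->fd == p (guards against a forged doubly linked list)

- The largebin check is more involved:

For a large chunk that is not the head of its size group (fd_nextsize == NULL), this step is skipped.

For a large chunk that is the head of its size group, verify p->fd_nextsize->bk_nextsize == p && p->bk_nextsize->fd_nextsize == p, then:

a. If the chunk is the only one of its size, it is simply removed from the nextsize list.

b. If another chunk of the same size follows it, the head role is handed to that chunk (p->fd) before p is unlinked.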
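The first check can be sketched on a bare doubly linked list. This is a simplified model, not glibc's macro: the struct node type and the checked_unlink name are invented here, and the fd_nextsize / bk_nextsize handling is left out.

```c
#include <assert.h>
#include <stddef.h>

/* Minimal doubly linked node; only the fd/bk part of a chunk is modelled. */
struct node {
    struct node *fd;
    struct node *bk;
};

/* Returns 0 on success, -1 if the neighbours do not point back at p
   (the case where glibc reports "corrupted double-linked list"). */
static int checked_unlink(struct node *p)
{
    assert(p != NULL);
    struct node *fd = p->fd;
    struct node *bk = p->bk;
    if (fd->bk != p || bk->fd != p)
        return -1;                /* list left untouched */
    fd->bk = bk;
    bk->fd = fd;
    return 0;
}
```

A node whose fd and bk point into a real list, but which the list itself does not point back to, is rejected without any write taking place. That is why unlink exploits against this version need a location that already holds a pointer to p.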

/* Take a chunk off a bin list */

#define unlink(AV, P, BK, FD) { \

FD = P->fd; \

BK = P->bk; \

if (__builtin_expect (FD->bk != P || BK->fd != P, 0)) \

malloc_printerr (check_action, "corrupted double-linked list", P, AV); \

else { \

FD->bk = BK; \

BK->fd = FD; \

if (!in_smallbin_range (P->size) \

&& __builtin_expect (P->fd_nextsize != NULL, 0)) { \

if (__builtin_expect (P->fd_nextsize->bk_nextsize != P, 0) \

|| __builtin_expect (P->bk_nextsize->fd_nextsize != P, 0)) \

malloc_printerr (check_action, \

"corrupted double-linked list (not small)", \

P, AV); \

if (FD->fd_nextsize == NULL) { \

if (P->fd_nextsize == P) \

FD->fd_nextsize = FD->bk_nextsize = FD; \

else { \

FD->fd_nextsize = P->fd_nextsize; \

FD->bk_nextsize = P->bk_nextsize; \

P->fd_nextsize->bk_nextsize = FD; \

P->bk_nextsize->fd_nextsize = FD; \

} \

} else { \

P->fd_nextsize->bk_nextsize = P->bk_nextsize; \

P->bk_nextsize->fd_nextsize = P->fd_nextsize; \

} \

} \

} \

}

malloc_consolidate

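A function that merges fastbin chunks and moves them into the unsorted bin. It is only called under specific conditions.

When there is no convenient way to create a smallbin-sized free chunk directly, this function can be used to produce an unsorted chunk and thereby leak a libc address.

Call conditions

Initialization

For example, when a small chunk is requested and the corresponding small bin has not been initialized yet, malloc_consolidate is called to initialize the arena. This is not covered further here.

malloc large chunk

When a large chunk is requested and fast chunks exist, malloc consolidates them first.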

/*

If this is a large request, consolidate fastbins before continuing.

While it might look excessive to kill all fastbins before

even seeing if there is space available, this avoids

fragmentation problems normally associated with fastbins.

Also, in practice, programs tend to have runs of either small or

large requests, but less often mixtures, so consolidation is not

invoked all that often in most programs. And the programs that

it is called frequently in otherwise tend to fragment.

*/

else

{

idx = largebin_index (nb);

if (have_fastchunks (av))

malloc_consolidate (av);

}

malloc from top chunk with insufficient space

If the allocation falls through to the top chunk, malloc first checks whether the top chunk is large enough.

If it is not, and fast chunks exist, malloc_consolidate is called.

if ((unsigned long) (size) >= (unsigned long) (nb + MINSIZE))

{

remainder_size = size - nb;

remainder = chunk_at_offset (victim, nb);

av->top = remainder;

set_head (victim, nb | PREV_INUSE |

(av != &main_arena ? NON_MAIN_ARENA : 0));

set_head (remainder, remainder_size | PREV_INUSE);

check_malloced_chunk (av, victim, nb);

void *p = chunk2mem (victim);

alloc_perturb (p, bytes);

return p;

}

/* When we are using atomic ops to free fast chunks we can get

here for all block sizes. */

else if (have_fastchunks (av))

{

malloc_consolidate (av);

/* restore original bin index */

if (in_smallbin_range (nb))

idx = smallbin_index (nb);

else

idx = largebin_index (nb);

}

After free, chunk_size >= FASTBIN_CONSOLIDATION_THRESHOLD

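FASTBIN_CONSOLIDATION_THRESHOLD is 65536.

Once free has finished merging, if the resulting chunk size is at least 65536, malloc_consolidate is called (when fast chunks exist).

In effect this corresponds to freeing a large chunk.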

/*

If freeing a large space, consolidate possibly-surrounding

chunks. Then, if the total unused topmost memory exceeds trim

threshold, ask malloc_trim to reduce top.

Unless max_fast is 0, we don't know if there are fastbins

bordering top, so we cannot tell for sure whether threshold

has been reached unless fastbins are consolidated. But we

don't want to consolidate on each free. As a compromise,

consolidation is performed if FASTBIN_CONSOLIDATION_THRESHOLD

is reached.

*/

if ((unsigned long)(size) >= FASTBIN_CONSOLIDATION_THRESHOLD) {

if (have_fastchunks(av))

malloc_consolidate(av);

Source analysis

static void malloc_consolidate(mstate av)

{

mfastbinptr* fb; /* current fastbin being consolidated */

mfastbinptr* maxfb; /* last fastbin (for loop control) */

mchunkptr p; /* current chunk being consolidated */

mchunkptr nextp; /* next chunk to consolidate */

mchunkptr unsorted_bin; /* bin header */

mchunkptr first_unsorted; /* chunk to link to */

/* These have same use as in free() */

mchunkptr nextchunk;

INTERNAL_SIZE_T size;

INTERNAL_SIZE_T nextsize;

INTERNAL_SIZE_T prevsize;

int nextinuse;

mchunkptr bck;

mchunkptr fwd;

/*

If max_fast is 0, we know that av hasn't

yet been initialized, in which case do so below

*/

if (get_max_fast () != 0) { // max_fast != 0 means the arena has been initialized

clear_fastchunks(av);

unsorted_bin = unsorted_chunks(av);

/*

Remove each chunk from fast bin and consolidate it, placing it

then in unsorted bin. Among other reasons for doing this,

placing in unsorted bin avoids needing to calculate actual bins

until malloc is sure that chunks aren't immediately going to be

reused anyway.

*/

maxfb = &fastbin (av, NFASTBINS - 1);

fb = &fastbin (av, 0);

do {

p = atomic_exchange_acq (fb, 0); // detach the whole chain of this fastbin (the outer loop visits every fastbin)

if (p != 0) {

do {

check_inuse_chunk(av, p); // the inner loop visits every chunk of this fastbin chain

nextp = p->fd;

/* Slightly streamlined version of consolidation code in free() */

size = p->size & ~(PREV_INUSE|NON_MAIN_ARENA);

nextchunk = chunk_at_offset(p, size);

nextsize = chunksize(nextchunk);

if (!prev_inuse(p)) { // previous chunk is free: consolidate backward

prevsize = p->prev_size;

size += prevsize;

p = chunk_at_offset(p, -((long) prevsize));

unlink(av, p, bck, fwd);

}

if (nextchunk != av->top) { // the next chunk is not the top chunk

nextinuse = inuse_bit_at_offset(nextchunk, nextsize);

if (!nextinuse) { // next chunk is free: consolidate forward

size += nextsize;

unlink(av, nextchunk, bck, fwd);

} else

clear_inuse_bit_at_offset(nextchunk, 0);

first_unsorted = unsorted_bin->fd;

unsorted_bin->fd = p;

first_unsorted->bk = p;

if (!in_smallbin_range (size)) {

p->fd_nextsize = NULL;

p->bk_nextsize = NULL;

}

set_head(p, size | PREV_INUSE);

p->bk = unsorted_bin; // link the chunk into the unsorted bin

p->fd = first_unsorted;

set_foot(p, size);

}

else { // the chunk borders the top chunk: merge it into top

size += nextsize;

set_head(p, size | PREV_INUSE);

av->top = p;

}

} while ( (p = nextp) != 0);

}

} while (fb++ != maxfb);

}

else {

malloc_init_state(av);

check_malloc_state(av);

}

}

For a more detailed analysis, see this article:

https://blog.csdn.net/Plus_RE/article/details/79265805

__libc_malloc

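This is the entry point reached first when malloc is called.

- It reads __malloc_hook and, if the value is non-zero, calls it (overwriting the hook is a way to hijack control flow)

- It checks whether tcache holds a suitable chunk (libc >= 2.27)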
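The hook dispatch has a very simple shape, which is what makes it attractive as a target. The sketch below reproduces that shape with a private function pointer; demo_malloc_hook, demo_malloc and demo_hook_fn are hypothetical names, not glibc symbols, and the real hook also receives the caller's return address.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical stand-in for __malloc_hook: a writable function pointer. */
static void *(*demo_malloc_hook)(size_t) = NULL;

/* Same shape as the start of __libc_malloc: call the hook if set, else allocate. */
static void *demo_malloc(size_t bytes)
{
    void *(*hook)(size_t) = demo_malloc_hook;
    if (hook != NULL)
        return hook(bytes);       /* control flow goes wherever the pointer says */
    return malloc(bytes);
}

/* Example hook: ignores the request and returns a fixed address. */
static char demo_sentinel;
static void *demo_hook_fn(size_t bytes)
{
    (void)bytes;
    return &demo_sentinel;
}
```

Once the pointer is non-NULL, every allocation request is routed through it, so a single pointer-sized write to the hook is enough to redirect execution on the next malloc call.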

void *

__libc_malloc (size_t bytes)

{

mstate ar_ptr;

void *victim;

void *(*hook) (size_t, const void *)

= atomic_forced_read (__malloc_hook);

if (__builtin_expect (hook != NULL, 0))

return (*hook)(bytes, RETURN_ADDRESS (0));

arena_get (ar_ptr, bytes);

victim = _int_malloc (ar_ptr, bytes);

/* Retry with another arena only if we were able to find a usable arena

before. */

if (!victim && ar_ptr != NULL)

{

LIBC_PROBE (memory_malloc_retry, 1, bytes);

ar_ptr = arena_get_retry (ar_ptr, bytes);

victim = _int_malloc (ar_ptr, bytes);

}

if (ar_ptr != NULL)

(void) mutex_unlock (&ar_ptr->mutex);

assert (!victim || chunk_is_mmapped (mem2chunk (victim)) ||

ar_ptr == arena_for_chunk (mem2chunk (victim)));

return victim;

}

libc_hidden_def (__libc_malloc)

_int_malloc

The nb variable is the requested size after padding and alignment.

The function examines nb and tries the following allocation paths in turn.
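How nb is derived from the request can be sketched as follows. The arithmetic follows glibc's request2size macro; the constants assume a typical 64-bit build (SIZE_SZ 8, MALLOC_ALIGNMENT 16, MINSIZE 32), and request_to_nb is a name chosen for this example. The real code rejects oversized requests separately, which the sketch only guards with an assert.

```c
#include <assert.h>
#include <stddef.h>

/* Constants for a typical 64-bit glibc 2.23 build (assumed here). */
#define SIZE_SZ_64    ((size_t)8)
#define ALIGN_MASK_64 ((size_t)15)   /* MALLOC_ALIGNMENT (16) minus 1 */
#define MINSIZE_64    ((size_t)32)

/* Add one size word of overhead, round up to the alignment,
   and never go below MINSIZE. */
static size_t request_to_nb(size_t req)
{
    assert(req <= (size_t)-1 - 64);  /* keep the addition below from wrapping */
    size_t padded = req + SIZE_SZ_64 + ALIGN_MASK_64;
    if (padded < MINSIZE_64)
        return MINSIZE_64;
    return padded & ~ALIGN_MASK_64;
}
```

Under these assumptions a request of 24 bytes yields nb = 0x20, while 25 bytes already needs 0x30, because the last 8 bytes of user data may spill into the prev_size field of the next chunk.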

fastbin

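- Use idx to take the chunk at the head of the corresponding fastbin (if any)

- Check that the size of the chunk taken from the fastbin matches that fastbin

- Newer glibc versions also check that the chunk is properly aligned, which defeats the classic fastbin attack (listed here as libc >= 2.27, although this check appears to have arrived with safe-linking in a later release)

- The remaining chunks in that fastbin are moved into tcache as far as possible (libc >= 2.27)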

/*

If the size qualifies as a fastbin, first check corresponding bin.

This code is safe to execute even if av is not yet initialized, so we

can try it without checking, which saves some time on this fast path.

*/

if ((unsigned long) (nb) <= (unsigned long) (get_max_fast ()))

{

idx = fastbin_index (nb); // fastbin index computed from nb

mfastbinptr *fb = &fastbin (av, idx); // fb points at the head slot of that fastbin

mchunkptr pp = *fb;

do

{

victim = pp;

if (victim == NULL)

break;

}

while ((pp = catomic_compare_and_exchange_val_acq (fb, victim->fd, victim))

!= victim);

if (victim != 0) // the fastbin did hold a free chunk

{

// check that the victim's size matches this fastbin index

if (__builtin_expect (fastbin_index (chunksize (victim)) != idx, 0))

{

errstr = "malloc(): memory corruption (fast)";

errout:

malloc_printerr (check_action, errstr, chunk2mem (victim), av);

return NULL;

}

check_remalloced_chunk (av, victim, nb);

void *p = chunk2mem (victim);

alloc_perturb (p, bytes);

return p;

}

}

smallbin

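- Use idx to take the chunk at the tail of the corresponding smallbin (if any)

- Check victim->bk->fd == victim for the chunk taken from the smallbin (guards against a forged doubly linked list)

- Check that the size of the chunk taken from the smallbin matches (libc >= 2.27)

- The remaining chunks in that list are moved into tcache as far as possible (libc >= 2.27)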

/*

If a small request, check regular bin. Since these "smallbins"

hold one size each, no searching within bins is necessary.

(For a large request, we need to wait until unsorted chunks are

processed to find best fit. But for small ones, fits are exact

anyway, so we can check now, which is faster.)

*/

if (in_smallbin_range (nb))

{

idx = smallbin_index (nb);

bin = bin_at (av, idx); // locate the smallbin header for idx

if ((victim = last (bin)) != bin) // if the last chunk of the circular list is the header itself, the bin is empty

{

if (victim == 0) /* initialization check */

malloc_consolidate (av);

else

{

bck = victim->bk;

if (__glibc_unlikely (bck->fd != victim)) // check that victim->bk->fd is victim

{

errstr = "malloc(): smallbin double linked list corrupted";

goto errout;

}

set_inuse_bit_at_offset (victim, nb); // set PREV_INUSE in the next chunk's size field

bin->bk = bck; // take victim out of the smallbin (equivalent to an unlink)

bck->fd = bin;

if (av != &main_arena)

victim->size |= NON_MAIN_ARENA;

check_malloced_chunk (av, victim, nb);

void *p = chunk2mem (victim);

alloc_perturb (p, bytes);

return p;

}

}

}

largebin

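Let required_size be the size of the chunk being requested.

- Check whether required_size is no larger than bin->fd (the largest chunk in the largebin). If it is larger, no chunk in this bin fits and malloc moves on to the larger bins. In the 2.23 source this search runs after the unsorted bin has been processed.

- Starting from the smallest chunk, walk the sizes via victim = victim->bk_nextsize and stop at the first large chunk with victim->size >= required_size; this is target_chunk.

- unlink target_chunk (if target_chunk is the head of a group of equal-sized chunks, unlink target_chunk->fd instead).

- Split target_chunk and put the remainder into the unsorted bin (if the remainder would be smaller than MINSIZE, the chunk is not split).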
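The search and the split decision above can be sketched on a plain array. This is a model under stated assumptions, not the glibc code: the bin is represented as an array of sizes sorted from largest to smallest, the skip list is replaced by a linear walk, MINSIZE is taken as 32 (64-bit), and pick_best_fit / split_remainder are names invented here.

```c
#include <assert.h>
#include <stddef.h>

#define MINSIZE_64 ((size_t)32)   /* assumed 64-bit MINSIZE */

/* sizes[] models one large bin, sorted from largest to smallest.
   Returns the index of the smallest chunk that still fits nb, or -1. */
static int pick_best_fit(const size_t *sizes, int count, size_t nb)
{
    assert(sizes != NULL && count >= 0);
    if (count == 0 || sizes[0] < nb)   /* empty bin or largest chunk too small */
        return -1;
    int i = count - 1;                 /* start from the smallest chunk */
    while (sizes[i] < nb)
        i--;                           /* walk towards larger sizes */
    return i;
}

/* Size of the remainder that goes to the unsorted bin, or 0 when the
   leftover is below MINSIZE and the whole chunk is handed out. */
static size_t split_remainder(size_t chunk_size, size_t nb)
{
    assert(chunk_size >= nb);
    size_t rest = chunk_size - nb;
    return rest < MINSIZE_64 ? 0 : rest;
}
```

The walk cannot run off the front of the array, because the first test already guarantees that sizes[0] fits.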

/*

If a large request, scan through the chunks of current bin in

sorted order to find smallest that fits. Use the skip list for this.

*/

if (!in_smallbin_range (nb))

{

bin = bin_at (av, idx); // find the largebin list for the requested size

/* skip scan if empty or largest chunk is too small */

if ((victim = first (bin)) != bin &&

(unsigned long) (victim->size) >= (unsigned long) (nb))

// step 1: check that the first node, i.e. the largest chunk, is at least as large as the requested size

{

victim = victim->bk_nextsize;

while (((unsigned long) (size = chunksize (victim)) <

(unsigned long) (nb)))

// step 2: starting from the smallest chunk, walk the chunk-size list backwards and leave the loop at the first chunk whose size is >= the needed size

victim = victim->bk_nextsize;

/* Avoid removing the first entry for a size so that the skip

list does not have to be rerouted. */

if (victim != last (bin) && victim->size == victim->fd->size)

victim = victim->fd;

// step 3: if several chunks share this size, take the node after the group head rather than the head itself

remainder_size = size - nb;

unlink (av, victim, bck, fwd); // step 4: largebin unlink

/* Exhaust */

if (remainder_size < MINSIZE) // step 5: if the leftover is smaller than MINSIZE, hand the whole chunk to the user

{

set_inuse_bit_at_offset (victim, size);

if (av != &main_arena)

victim->size |= NON_MAIN_ARENA;

}

/* Split */

else

{

remainder = chunk_at_offset (victim, nb);

// step 6: if the leftover can still form a chunk, put it into the unsorted bin

/* We cannot assume the unsorted list is empty and therefore

have to perform a complete insert here. */

bck = unsorted_chunks (av);

fwd = bck->fd;

if (__glibc_unlikely (fwd->bk != bck))

{

errstr = "malloc(): corrupted unsorted chunks";

goto errout;

}

remainder->bk = bck;

remainder->fd = fwd;

bck->fd = remainder;

fwd->bk = remainder;

if (!in_smallbin_range (remainder_size))

{

remainder->fd_nextsize = NULL;

remainder->bk_nextsize = NULL;

}

set_head (victim, nb | PREV_INUSE |

(av != &main_arena ? NON_MAIN_ARENA : 0));

set_head (remainder, remainder_size | PREV_INUSE);

set_foot (remainder, remainder_size);

}

check_malloced_chunk (av, victim, nb);

void *p = chunk2mem (victim);

alloc_perturb (p, bytes);

return p;

}

}

unsorted bin

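If neither the fastbin path nor the smallbin path returned a chunk, malloc looks for one in the unsorted bin.

- The unsorted bin is searched from the last chunk towards the front.

- glibc 2.23 does not validate the bk pointer of unsorted chunks, which makes the unsorted bin attack easy to trigger.

- Chunks that do not satisfy the request are moved into their proper bins.

- Fastbins are LIFO and smallbins are FIFO (insert at the head, take from the tail); there is nothing surprising there. Largebins are handled a little differently:

a. When the chunk's size is unique within the largebin (no chunk of that size is already present), it becomes the head of a new size group.

b. If the largebin already holds a chunk of the same size, the new chunk is inserted right after the head of that group (head->fd = P).

- Note that regardless of the order in which large chunks entered the unsorted bin, they always end up in the largebin sorted with larger sizes first and smaller sizes last.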
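The missing bk check is easiest to see on the two stores that remove a chunk from the unsorted list. The sketch below models only the list pointers; struct ubin_chunk and take_last_unsorted are names made up for the example, and the stand-in object fake plays the role that (target address - 0x10) would play in an actual exploit on 64-bit.

```c
#include <assert.h>
#include <stddef.h>

/* Only the list pointers of a chunk are modelled. */
struct ubin_chunk {
    struct ubin_chunk *fd;
    struct ubin_chunk *bk;
};

/* The same two stores glibc 2.23 performs when taking the last chunk out
   of the unsorted bin; there is no check that bck->fd == victim. */
static struct ubin_chunk *take_last_unsorted(struct ubin_chunk *bin)
{
    assert(bin != NULL);
    struct ubin_chunk *victim = bin->bk;
    struct ubin_chunk *bck = victim->bk;  /* attacker-controlled if victim->bk was overwritten */
    bin->bk = bck;
    bck->fd = bin;                        /* writes the bin address into bck->fd */
    return victim;
}
```

If victim->bk has been redirected to some object, that object's fd field ends up holding the address of the bin header. The value written is not attacker-chosen, only the destination is, which is why this primitive is typically used to plant a large libc address somewhere useful.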

int iters = 0;

while ((victim = unsorted_chunks (av)->bk) != unsorted_chunks (av))

{

bck = victim->bk;

if (__builtin_expect (victim->size <= 2 * SIZE_SZ, 0)

|| __builtin_expect (victim->size > av->system_mem, 0))

malloc_printerr (check_action, "malloc(): memory corruption",

chunk2mem (victim), av);

size = chunksize (victim);

/*

If a small request, try to use last remainder if it is the

only chunk in unsorted bin. This helps promote locality for

runs of consecutive small requests. This is the only

exception to best-fit, and applies only when there is

no exact fit for a small chunk.

*/

if (in_smallbin_range (nb) &&

bck == unsorted_chunks (av) &&

victim == av->last_remainder &&

(unsigned long) (size) > (unsigned long) (nb + MINSIZE))

{

/* split and reattach remainder */

remainder_size = size - nb;

remainder = chunk_at_offset (victim, nb);

unsorted_chunks (av)->bk = unsorted_chunks (av)->fd = remainder;

av->last_remainder = remainder;

remainder->bk = remainder->fd = unsorted_chunks (av);

if (!in_smallbin_range (remainder_size))

{

remainder->fd_nextsize = NULL;

remainder->bk_nextsize = NULL;

}

set_head (victim, nb | PREV_INUSE |

(av != &main_arena ? NON_MAIN_ARENA : 0));

set_head (remainder, remainder_size | PREV_INUSE);

set_foot (remainder, remainder_size);

check_malloced_chunk (av, victim, nb);

void *p = chunk2mem (victim);

alloc_perturb (p, bytes);

return p;

}

/* remove from unsorted list */

unsorted_chunks (av)->bk = bck;

bck->fd = unsorted_chunks (av);

/* Take now instead of binning if exact fit */

if (size == nb)

{

set_inuse_bit_at_offset (victim, size);

if (av != &main_arena)

victim->size |= NON_MAIN_ARENA;

check_malloced_chunk (av, victim, nb);

void *p = chunk2mem (victim);

alloc_perturb (p, bytes);

return p;

}

/* place chunk in bin */

if (in_smallbin_range (size))

{

victim_index = smallbin_index (size);

bck = bin_at (av, victim_index);

fwd = bck->fd;

}

else

{

victim_index = largebin_index (size);

bck = bin_at (av, victim_index);

fwd = bck->fd;

/* maintain large bins in sorted order */

if (fwd != bck)

{

/* Or with inuse bit to speed comparisons */

size |= PREV_INUSE;

/* if smaller than smallest, bypass loop below */

assert ((bck->bk->size & NON_MAIN_ARENA) == 0);

if ((unsigned long) (size) < (unsigned long) (bck->bk->size))

{

fwd = bck;

bck = bck->bk;

victim->fd_nextsize = fwd->fd;

victim->bk_nextsize = fwd->fd->bk_nextsize;

fwd->fd->bk_nextsize = victim->bk_nextsize->fd_nextsize = victim;

}

else

{

assert ((fwd->size & NON_MAIN_ARENA) == 0);

while ((unsigned long) size < fwd->size)

{

fwd = fwd->fd_nextsize;

assert ((fwd->size & NON_MAIN_ARENA) == 0);

}

if ((unsigned long) size == (unsigned long) fwd->size)

/* Always insert in the second position. */

fwd = fwd->fd;

else

{

victim->fd_nextsize = fwd;

victim->bk_nextsize = fwd->bk_nextsize;

fwd->bk_nextsize = victim;

victim->bk_nextsize->fd_nextsize = victim;

}

bck = fwd->bk;

}

}

else

victim->fd_nextsize = victim->bk_nextsize = victim;

}

mark_bin (av, victim_index);

victim->bk = bck;

victim->fd = fwd;

fwd->bk = victim;

bck->fd = victim;

#define MAX_ITERS 10000

if (++iters >= MAX_ITERS)

break;

}

split

If none of the bins yields a chunk, glibc falls back to carving a chunk out of the top chunk. That process is not analyzed here for now (it is beyond my current skill).

__libc_free

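This is the entry point reached first when free is called.

- It reads __free_hook and, if the value is non-zero, calls it (overwriting the hook is a way to hijack control flow)

- Memory obtained through mmap is handled separately and does not go through the bins

- It checks whether tcache has a free slot (libc >= 2.27)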

void

__libc_free (void *mem)

{

mstate ar_ptr;

mchunkptr p; /* chunk corresponding to mem */

void (*hook) (void *, const void *)

= atomic_forced_read (__free_hook);

if (__builtin_expect (hook != NULL, 0))

{

(*hook)(mem, RETURN_ADDRESS (0));

return;

}

if (mem == 0) /* free(0) has no effect */

return;

p = mem2chunk (mem);

if (chunk_is_mmapped (p)) /* release mmapped memory. */

{

/* see if the dynamic brk/mmap threshold needs adjusting */

if (!mp_.no_dyn_threshold

&& p->size > mp_.mmap_threshold

&& p->size <= DEFAULT_MMAP_THRESHOLD_MAX)

{

mp_.mmap_threshold = chunksize (p);

mp_.trim_threshold = 2 * mp_.mmap_threshold;

LIBC_PROBE (memory_mallopt_free_dyn_thresholds, 2,

mp_.mmap_threshold, mp_.trim_threshold);

}

munmap_chunk (p);

return;

}

ar_ptr = arena_for_chunk (p);

_int_free (ar_ptr, p, 0);

}

libc_hidden_def (__libc_free)

_int_free

definition

/*

------------------------------ free ------------------------------

*/

static void

_int_free (mstate av, mchunkptr p, int have_lock)

{

INTERNAL_SIZE_T size; /* its size */

mfastbinptr *fb; /* associated fastbin */

mchunkptr nextchunk; /* next contiguous chunk */

INTERNAL_SIZE_T nextsize; /* its size */

int nextinuse; /* true if nextchunk is used */

INTERNAL_SIZE_T prevsize; /* size of previous contiguous chunk */

mchunkptr bck; /* misc temp for linking */

mchunkptr fwd; /* misc temp for linking */

size = chunksize (p);

/* Little security check which won't hurt performance: the

allocator never wrapps around at the end of the address space.

Therefore we can exclude some size values which might appear

here by accident or by "design" from some intruder. */

if (__builtin_expect ((uintptr_t) p > (uintptr_t) -size, 0)

|| __builtin_expect (misaligned_chunk (p), 0))

malloc_printerr ("free(): invalid pointer");

/* We know that each chunk is at least MINSIZE bytes in size or a

multiple of MALLOC_ALIGNMENT. */

if (__glibc_unlikely (size < MINSIZE || !aligned_OK (size)))

malloc_printerr ("free(): invalid size");

check_inuse_chunk(av, p); // check that the inuse bit is 1

tcache

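(libc 2.23 has no tcache; skip this part)

- If the libc version has tcache (libc >= 2.27) and the matching tcache bin has a free slot, the chunk is put straight into tcache.

- The double-free check in the code below fires when e->key == tcache and then walks the bin for this size looking for the chunk being freed.

- Each tcache bin holds up to 7 chunks by default.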

#if USE_TCACHE // only when tcache is compiled in

{

size_t tc_idx = csize2tidx (size); // compute the tcache index

if (tcache != NULL && tc_idx < mp_.tcache_bins) // tcache exists and the index is in range

{

/* Check to see if it's already in the tcache. */

tcache_entry *e = (tcache_entry *) chunk2mem (p);

/* This test succeeds on double free. However, we don't 100%

trust it (it also matches random payload data at a 1 in

2^<size_t> chance), so verify it's not an unlikely

coincidence before aborting. */

if (__glibc_unlikely (e->key == tcache))

{

tcache_entry *tmp;

LIBC_PROBE (memory_tcache_double_free, 2, e, tc_idx);

for (tmp = tcache->entries[tc_idx];

tmp;

tmp = REVEAL_PTR (tmp->next))

{

if (__glibc_unlikely (!aligned_OK (tmp)))

malloc_printerr ("free(): unaligned chunk detected in tcache 2");

if (tmp == e)

malloc_printerr ("free(): double free detected in tcache 2");

/* If we get here, it was a coincidence. We've wasted a

few cycles, but don't abort. */

}

}

if (tcache->counts[tc_idx] < mp_.tcache_count) // if this tcache bin is not full, put the freed chunk into it

{

tcache_put (p, tc_idx);

return;

}

}

}

#endif

fastbin

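- From my debugging, TRIM_FASTBINS is off by default, which means a fastbin chunk adjacent to the top chunk is not merged into it.

- The size of the next chunk is checked: too small (<= 2 * SIZE_SZ) or too large (>= av->system_mem) raises an error.

- A double free is detected only by comparing against the chunk currently at the top of the fastbin.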
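Because the double-free test only looks at the top of the bin, it is easy to model. The sketch below is a single-threaded simplification (no atomics, no size check); struct fast_chunk and fastbin_push are names invented for the example.

```c
#include <assert.h>
#include <stddef.h>

struct fast_chunk {
    struct fast_chunk *fd;   /* singly linked: only fd is used */
};

/* Push p onto a LIFO fastbin. Returns -1 when p is already at the top
   (the "double free or corruption (fasttop)" case), 0 otherwise. */
static int fastbin_push(struct fast_chunk **bin, struct fast_chunk *p)
{
    assert(bin != NULL && p != NULL);
    if (*bin == p)
        return -1;
    p->fd = *bin;
    *bin = p;
    return 0;
}
```

Freeing a twice in a row is caught, but freeing a, then b, then a again goes through and leaves a cycle (a -> b -> a) in the list. That sequence is the basis of the well-known fastbin double-free technique.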

/*

If eligible, place chunk on a fastbin so it can be found

and used quickly in malloc.

*/

if ((unsigned long)(size) <= (unsigned long)(get_max_fast ())

#if TRIM_FASTBINS

/*

If TRIM_FASTBINS set, don't place chunks

bordering top into fastbins

*/

&& (chunk_at_offset(p, size) != av->top)

#endif

) {

if (__builtin_expect (chunk_at_offset (p, size)->size <= 2 * SIZE_SZ, 0)

|| __builtin_expect (chunksize (chunk_at_offset (p, size))

>= av->system_mem, 0))

{

/* We might not have a lock at this point and concurrent modifications

of system_mem might have let to a false positive. Redo the test

after getting the lock. */

if (have_lock

|| ({ assert (locked == 0);

mutex_lock(&av->mutex);

locked = 1;

/* check that the next chunk's size is valid */

chunk_at_offset (p, size)->size <= 2 * SIZE_SZ

|| chunksize (chunk_at_offset (p, size)) >= av->system_mem;

}))

{

errstr = "free(): invalid next size (fast)";

goto errout;

}

if (! have_lock)

{

(void)mutex_unlock(&av->mutex);

locked = 0;

}

}

free_perturb (chunk2mem(p), size - 2 * SIZE_SZ);

set_fastchunks(av);

unsigned int idx = fastbin_index(size);

fb = &fastbin (av, idx);

/* Atomically link P to its fastbin: P->FD = *FB; *FB = P; */

mchunkptr old = *fb, old2;

unsigned int old_idx = ~0u;

do

{

/* Check that the top of the bin is not the record we are going to add

(i.e., double free). */

if (__builtin_expect (old == p, 0))

{

errstr = "double free or corruption (fasttop)";

goto errout;

}

/* Check that size of fastbin chunk at the top is the same as

size of the chunk that we are adding. We can dereference OLD

only if we have the lock, otherwise it might have already been

deallocated. See use of OLD_IDX below for the actual check. */

// link the chunk being added to the fastbin in front of the previous top chunk (old)

if (have_lock && old != NULL)

old_idx = fastbin_index(chunksize(old));

p->fd = old2 = old;

}

while ((old = catomic_compare_and_exchange_val_rel (fb, p, old2)) != old2);

if (have_lock && old != NULL && __builtin_expect (old_idx != idx, 0))

{

errstr = "invalid fastbin entry (free)";

goto errout;

}

}

check

- free_chunk must not be the top chunk

- free_chunk's next_chunk must lie within the bounds of the heap

- The prev_inuse bit of free_chunk's next_chunk must be 1 (otherwise this is a double free)

nextchunk = chunk_at_offset(p, size);

/* Lightweight tests: check whether the block is already the

top block. */

if (__glibc_unlikely (p == av->top))

{

errstr = "double free or corruption (top)";

goto errout;

}

/* Or whether the next chunk is beyond the boundaries of the arena. */

if (__builtin_expect (contiguous (av)

&& (char *) nextchunk

>= ((char *) av->top + chunksize(av->top)), 0))

{

errstr = "double free or corruption (out)";

goto errout;

}

/* Or whether the block is actually not marked used. */

if (__glibc_unlikely (!prev_inuse(nextchunk)))

{

errstr = "double free or corruption (!prev)";

goto errout;

}

unlink

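This part checks whether the chunk before (or after) free_chunk has already been freed and, if so, merges the current chunk with that adjacent space.

- merging p with prev_chunk

- merging p with next_chunk

prev_chunk and next_chunk are located purely from the prev_size and size fields, so if those two fields can be modified, an unlink attack becomes possible.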
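The address arithmetic involved is small enough to show directly. The sketch below works on plain integers rather than real heap memory; the function names are made up, and the addresses used in the note after it are arbitrary illustrative values.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Address of the previous chunk, derived only from p's prev_size field
   (what chunk_at_offset(p, -prevsize) computes). */
static uintptr_t prev_chunk_addr(uintptr_t p, size_t prev_size)
{
    assert(p >= prev_size);
    return p - prev_size;
}

/* Address of the next chunk, derived only from p's size field
   with the three flag bits masked off. */
static uintptr_t next_chunk_addr(uintptr_t p, size_t size_field)
{
    return p + (size_field & ~(size_t)0x7);
}
```

For a chunk at 0x602090 with an honest prev_size of 0x90, the previous chunk is found at 0x602000. Overwriting prev_size with 0x80 moves that to 0x602010, which can point into attacker-controlled user data holding a fake chunk.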

nextsize = chunksize(nextchunk);

if (__builtin_expect (nextchunk->size <= 2 * SIZE_SZ, 0)

|| __builtin_expect (nextsize >= av->system_mem, 0))

{

errstr = "free(): invalid next size (normal)";

goto errout;

}

free_perturb (chunk2mem(p), size - 2 * SIZE_SZ);

/* consolidate backward */

if (!prev_inuse(p)) { // the chunk before p is not in use

prevsize = p->prev_size;

size += prevsize;

p = chunk_at_offset(p, -((long) prevsize));

unlink(av, p, bck, fwd); // so unlink it from its bin in order to merge the two

}

if (nextchunk != av->top) { // the chunk after p is not the top chunk

/* get and clear inuse bit */

nextinuse = inuse_bit_at_offset(nextchunk, nextsize);

/* consolidate forward */

if (!nextinuse) { // the chunk after p is not in use

unlink(av, nextchunk, bck, fwd); // so unlink it from its bin in order to merge the two

size += nextsize;

} else

clear_inuse_bit_at_offset(nextchunk, 0);

small_chunk

- Check where the chunk sits relative to the top chunk; if they are adjacent, merge it into top

- Otherwise add it to the unsorted bin

/*

Place the chunk in unsorted chunk list. Chunks are

not placed into regular bins until after they have

been given one chance to be used in malloc.

*/

bck = unsorted_chunks(av);

fwd = bck->fd;

if (__glibc_unlikely (fwd->bk != bck))

{

errstr = "free(): corrupted unsorted chunks";

goto errout;

}

p->fd = fwd;

p->bk = bck;

if (!in_smallbin_range(size))

{

p->fd_nextsize = NULL;

p->bk_nextsize = NULL;

}

bck->fd = p;

fwd->bk = p;

set_head(p, size | PREV_INUSE);

set_foot(p, size);

check_free_chunk(av, p);

}

/*

If the chunk borders the current high end of memory,

consolidate into top

*/

else { // the chunk borders the top chunk: merge directly

size += nextsize;

set_head(p, size | PREV_INUSE);

av->top = p;

check_chunk(av, p);

}

large bin

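Placeholder; not analyzed in detail for now.

Like a small chunk, a freed large chunk goes into the unsorted bin first. Only when the unsorted bin is next sorted may it move into a large bin; see the unsorted bin part of the malloc section for details.

The one thing to note is that when a large chunk lands in the unsorted bin, fd_nextsize and bk_nextsize are both set to NULL.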

if (!in_smallbin_range(size))

{

p->fd_nextsize = NULL;

p->bk_nextsize = NULL;

}
